库和配置设置

import os, sysfrom keras.models import Modelfrom keras.layers import Input, LSTM, GRU, Dense, Embeddingfrom keras.preprocessing.text import Tokenizerfrom keras.preprocessing.sequence import pad_sequencesfrom keras.utils import to_categoricalimport numpy as npimport matplotlib.pyplot as plt

BATCH_SIZE = 64

EPOCHS = 20

LSTM_NODES =256

NUM_SENTENCES = 20000

MAX_SENTENCE_LENGTH = 50

MAX_NUM_WORDS = 20000

EMBEDDING_SIZE = 100

数据集

Go. Va !Hi. Salut !Hi. Salut.Run! Cours !Run! Courez !Who? Qui ?Wow! Ça alors !Fire! Au feu !Help! À l'aide !Jump. Saute.Stop! Ça suffit !Stop! Stop !Stop! Arrête-toi !Wait! Attends !Wait! Attendez !Go on. Poursuis.Go on. Continuez.Go on. Poursuivez.Hello! Bonjour !Hello! Salut !

数据预处理

input_sentences = []output_sentences = []output_sentences_inputs = []count = 0

for line in open(r'/content/drive/My Drive/datasets/fra.txt', encoding="utf-8"):count += 1

if count > NUM_SENTENCES:break

if '\t' not in line:continue

input_sentence, output = line.rstrip().split('\t')output_sentence = output + ' <eos>'

output_sentence_input = '<sos> ' + outputinput_sentences.append(input_sentence)output_sentences.append(output_sentence)output_sentences_inputs.append(output_sentence_input)print("num samples input:", len(input_sentences))print("num samples output:", len(output_sentences))print("num samples output input:", len(output_sentences_inputs))

num samples input: 20000

num samples output: 20000

num samples output input: 20000

print(input_sentences[172])print(output_sentences[172])print(output_sentences_inputs[172])

I'm ill.Je suis malade. <eos>

<sos> Je suis malade.

标记化和填充

- 它将句子分为相应的单词列表

- 然后将单词转换为整数

Total unique words in the input: 3523

Length of longest sentence in input: 6

Total unique words in the output: 9561

Length of longest sentence in the output: 13

encoder_input_sequences.shape: (20000, 6)

encoder_input_sequences[172]: [ 0 0 0 0 6 539]

print(word2idx_inputs["i'm"])print(word2idx_inputs["ill"])

6

539

print("decoder_input_sequences.shape:", decoder_input_sequences.shape)print("decoder_input_sequences[172]:", decoder_input_sequences[172])

decoder_input_sequences.shape: (20000, 13)

decoder_input_sequences[172]: [ 2 3 6 188 0 0 0 0 0 0 0 0 0]

print(word2idx_outputs["<sos>"])print(word2idx_outputs["je"])print(word2idx_outputs["suis"])print(word2idx_outputs["malade."])

2

3

6

188

词嵌入

num_words = min(MAX_NUM_WORDS, len(word2idx_inputs) + 1)embedding_matrix = zeros((num_words, EMBEDDING_SIZE))for word, index in word2idx_inputs.items():embedding_vector = embeddings_dictionary.get(word)if embedding_vector is not None:embedding_matrix[index] = embedding_vector

print(embeddings_dictionary["ill"])

[ 0.12648 0.1366 0.22192 -0.025204 -0.7197 0.66147

0.48509 0.057223 0.13829 -0.26375 -0.23647 0.74349

0.46737 -0.462 0.20031 -0.26302 0.093948 -0.61756

-0.28213 0.1353 0.28213 0.21813 0.16418 0.22547

-0.98945 0.29624 -0.62476 -0.29535 0.21534 0.92274

0.38388 0.55744 -0.14628 -0.15674 -0.51941 0.25629

-0.0079678 0.12998 -0.029192 0.20868 -0.55127 0.075353

0.44746 -0.71046 0.75562 0.010378 0.095229 0.16673

0.22073 -0.46562 -0.10199 -0.80386 0.45162 0.45183

0.19869 -1.6571 0.7584 -0.40298 0.82426 -0.386

0.0039546 0.61318 0.02701 -0.3308 -0.095652 -0.082164

0.7858 0.13394 -0.32715 -0.31371 -0.20247 -0.73001

-0.49343 0.56445 0.61038 0.36777 -0.070182 0.44859

-0.61774 -0.18849 0.65592 0.44797 -0.10469 0.62512

-1.9474 -0.60622 0.073874 0.50013 -1.1278 -0.42066

-0.37322 -0.50538 0.59171 0.46534 -0.42482 0.83265

0.081548 -0.44147 -0.084311 -1.2304 ]

print(embedding_matrix[539])

[ 0.12648 0.1366 0.22192 -0.025204 -0.7197 0.66147

0.48509 0.057223 0.13829 -0.26375 -0.23647 0.74349

0.46737 -0.462 0.20031 -0.26302 0.093948 -0.61756

-0.28213 0.1353 0.28213 0.21813 0.16418 0.22547

-0.98945 0.29624 -0.62476 -0.29535 0.21534 0.92274

0.38388 0.55744 -0.14628 -0.15674 -0.51941 0.25629

-0.0079678 0.12998 -0.029192 0.20868 -0.55127 0.075353

0.44746 -0.71046 0.75562 0.010378 0.095229 0.16673

0.22073 -0.46562 -0.10199 -0.80386 0.45162 0.45183

0.19869 -1.6571 0.7584 -0.40298 0.82426 -0.386

0.0039546 0.61318 0.02701 -0.3308 -0.095652 -0.082164

0.7858 0.13394 -0.32715 -0.31371 -0.20247 -0.73001

-0.49343 0.56445 0.61038 0.36777 -0.070182 0.44859

-0.61774 -0.18849 0.65592 0.44797 -0.10469 0.62512

-1.9474 -0.60622 0.073874 0.50013 -1.1278 -0.42066

-0.37322 -0.50538 0.59171 0.46534 -0.42482 0.83265

0.081548 -0.44147 -0.084311 -1.2304 ]

创建模型

以下脚本创建空的输出数组:decoder_targets_one_hot = np.zeros((len(input_sentences),max_out_len,num_words_output),dtype='float32'

) decoder_targets_one_hot.shape

(20000, 13, 9562)

for i, d in enumerate(decoder_output_sequences):for t, word in enumerate(d):decoder_targets_one_hot[i, t, word] = 1

decoder_dense = Dense(num_words_output, activation='softmax')

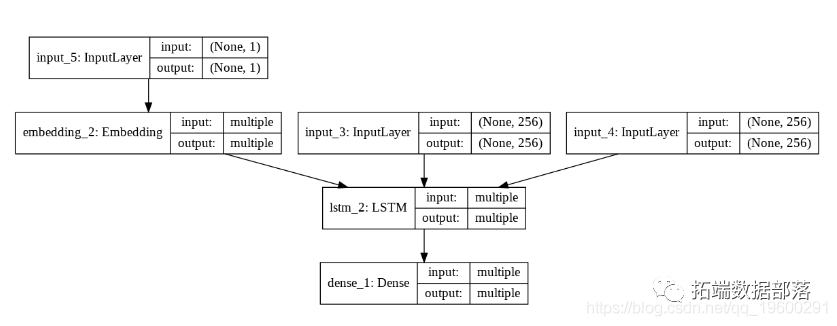

model = Model([encoder_inputs_placeholder,decoder_inputs_placeholder], decoder_outputs)

plot_model(model, to_file='model_plot4a.png', show_shapes=True, show_layer_names=True)

model.fit()

修改预测模型

输入在编码器/解码器的左侧,输出在右侧。

Step 1: ill -> Encoder -> enc(h1,c1) + <sos> -> Decoder -> je + dec(h1,c1)step 2: + je -> Decoder -> suis + dec(h2,c2)step 3: + suis -> Decoder -> malade. + dec(h3,c3)step 3: + malade. -> Decoder -> <eos> + dec(h4,c4)

输入在编码器/解码器的左侧,输出在右侧。

Step 1: ill -> Encoder -> enc(h1,c1) + <sos> -> Decoder -> y1(je) + dec(h1,c1)step 2: + y1 -> Decoder -> y2(suis) + dec(h2,c2)step 3: + y2 -> Decoder -> y3(malade.) + dec(h3,c3)step 3: + y3 -> Decoder -> y4(<eos>) + dec(h4,c4)

encoder_model = Model(encoder_inputs_placeholder, encoder_states)

decoder_state_input_h = Input(shape=(LSTM_NODES,))

decoder_inputs_single = Input(shape=(1,))...接下来,我们需要为解码器输出创建占位符:

decoder_outputs, h, c = decoder_lstm(...)

decoder_states = [h, c]decoder_outputs = decoder_dense(decoder_outputs)

decoder_model = Model()

plot_model(decoder_model, to_file='model_plot_dec.png', show_shapes=True, show_layer_names=True)

做出预测

idx2word_input = {v:k for k, v in word2idx_inputs.items()}idx2word_target = {v:k for k, v in word2idx_outputs.items()}

def translate_sentence(input_seq):

states_value = encoder_model.predict(input_seq)target_seq = np.zeros((1, 1))target_seq[0, 0] = word2idx_outputs['<sos>']eos = word2idx_outputs['<eos>']output_sentence = []for _ in range(max_out_len):return ' '.join(output_sentence)

测试模型

print('-')print('Input:', input_sentences[i])print('Response:', translation)

-Input: You're not fired.

Response: vous n'êtes pas viré.

-Input: I'm not a lawyer.

Response: je ne suis pas avocat.

结论与展望

发布者:股市刺客,转载请注明出处:https://www.95sca.cn/archives/109519

站内所有文章皆来自网络转载或读者投稿,请勿用于商业用途。如有侵权、不妥之处,请联系站长并出示版权证明以便删除。敬请谅解!